Hi, I'm Srinivas Rao,

a senior undergraduate student at the Indian Institute of Technology Kanpur.

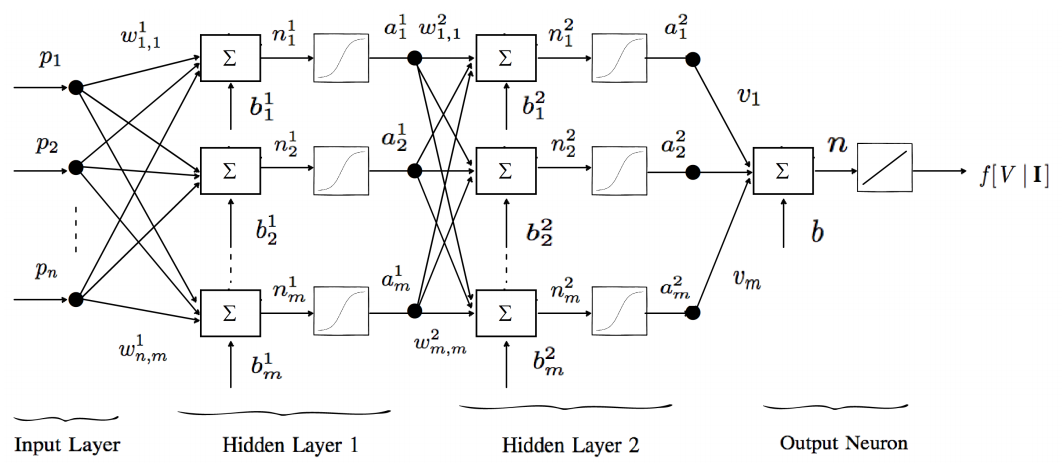

At my university, I am part of the Visual Computing Lab, supervised by Prof. Vinay Namboodiri. My research interests are Computer Vision, Deep Learning, and Computer Graphics (VR/AR).

Besides being a student, I currently work part-time as a research consultant for Fyusion Inc. (a 3D computer vision and machine learning company based in San Francisco), where I solve computer vision problems using deep learning. A recent TechCrunch post about the company can be found here.

Last summer, I was a research intern at Fyusion Inc., San Francisco, and the summer before that, I was a research intern with the wonderful Graphdeco team at Inria Sophia Antipolis, France.

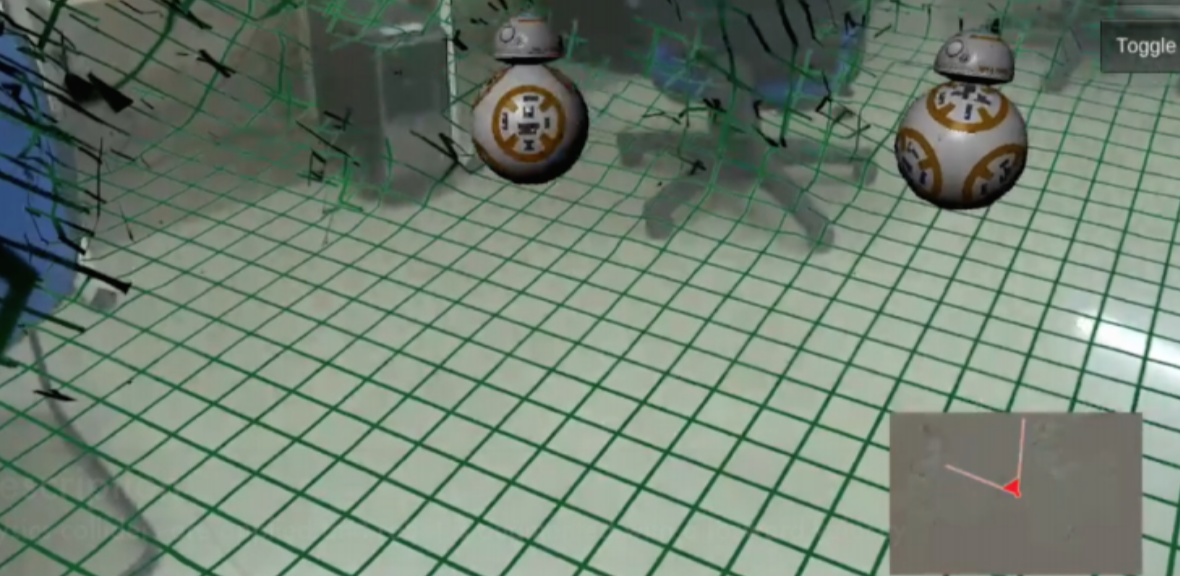

Besides research, I like to tinker with new sensors and devices such as Google Tango, Oculus Rift, and Leap Motion in my free time. Sometimes I post about these experiments on YouTube. I like the open-source philosophy, so I also try to open-source some of my experiments on GitHub.

News

- I will be attending SIGGRAPH Asia 2017, Bangkok, Thailand.

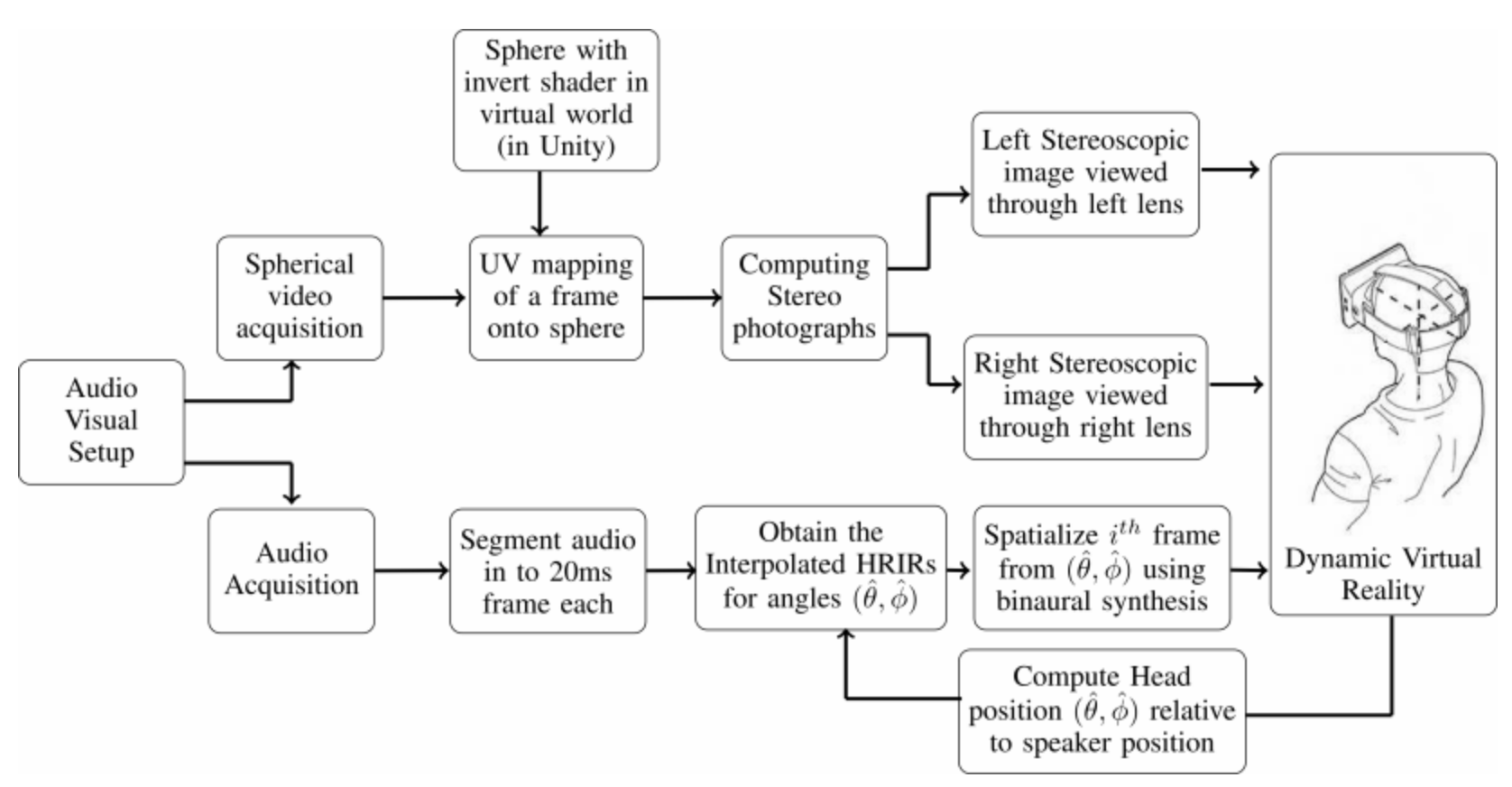

- I will be presenting my paper at ISMAR 2017, Nantes, France.

- I will be interning at Fyusion Inc., San Francisco for the summer of 2017.

- I will be interning at Graphdeco, Inria Sophia Antipolis for the summer of 2016.